Use your data more effectively: why you should combine your analytics and MMP data

Anyone who buys traffic for their mobile app is constantly trying to find better answers to the question “how can I acquire users more effectively?” Those who follow our content won’t be surprised to know our advice: better utilize your available data.

A tool commonly used by app marketers is the MMP, or mobile measurement partner. The primary purpose of an MMP is to help developers track installs from a campaign and, increasingly, the return on spend from those campaigns. To the marketer, the MMP is a key tool for tracking app marketing success, but it doesn’t solve every problem. In the unending quest for growth, a marketer needs to find new acquisition sources, identify successful campaigns earlier, optimize poorly performing ones, and continually find and scale better users for their app.

App marketers often overlook a key asset in their organization: the data generated by in-app analytics that track user behavior. There is some overlap between MMP and analytics functionality (some MMPs can track events, and analytics platforms can be used for campaign tracking), but each generally lacks depth outside its core function. Most mobile apps acquiring users have both in-app analytics and an MMP, but marketers often don’t look at these two data sources as complementary. In fact, when talking to clients we find that many marketing teams don’t leverage analytics, and a distressing number of marketers don’t even have access to the analytics data. This is a missed opportunity. Today we’ll outline why and how marketers can be more effective when combining analytics and marketing datasets.

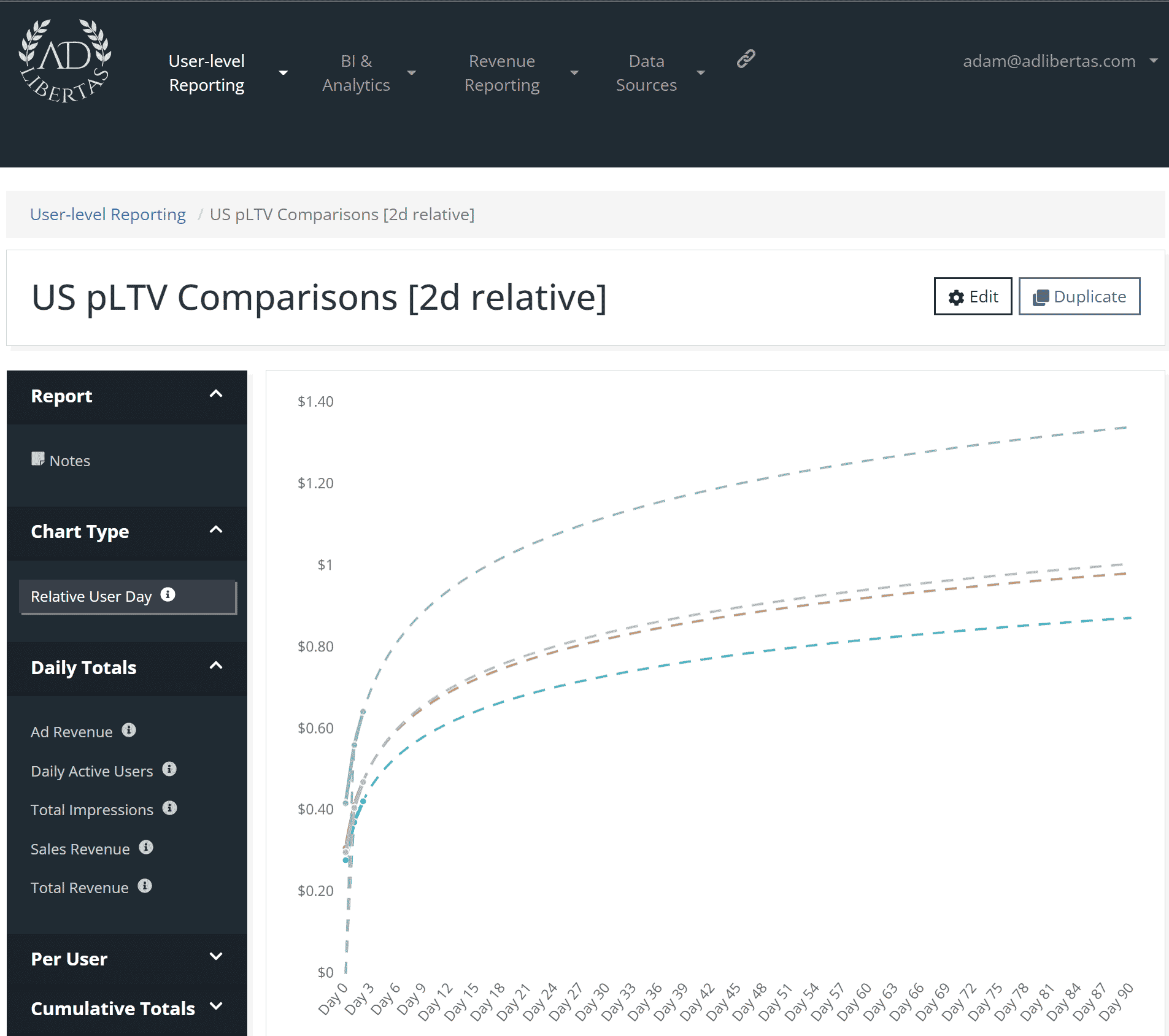

Predictive modeling of LTV for users with 2 days of history.

Measure campaign success earlier

Few apps can claim to collect a user’s full value on the user’s first day. Most need to wait weeks, in some cases years, to reach the full user lifetime value (LTV). This means app developers would need to wait an unrealistically long time to measure actual ROI or return on ad spend (ROAS) for user acquisition campaigns. This, combined with shifting market prices, app functionality changes, and campaign optimizations, means marketers are constantly looking for earlier indications, or predictions, of campaign success. Relatedly, this is why ad platforms like Facebook accept optimization signals within a window of at most 7 days, and recommend that you send signals back within 24 hours.

This is why an MMP allows, and encourages, you to include key post-install events that indicate a highly valuable user. This is helpful when there’s a clear dollar value associated with the event, like an in-app purchase (IAP). But the IAP event isn’t sufficient in most cases. In most apps, only a subset of users ever trigger a purchase event. This means your signal is (a) delayed until they purchase and (b) limited to the subset of users who convert. Delay aside, a sparse signal makes it much harder to track campaign success. If 10% of your users purchase, you’ll need to aggregate 10X more users (or wait 10X longer) to get the same number of signals on the value of a campaign. To make matters more difficult, iOS (and soon Google) enforces privacy thresholds on campaign signals, so restricting yourself to a rare success metric can degrade your measurements even further.
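To make the 10X arithmetic concrete, here is a minimal sketch in Python. The function name, the target of 100 signal events per campaign, and the 50% early-event rate are illustrative assumptions, not figures from any MMP or ad platform.

```python
import math

def installs_needed(target_events: int, event_rate: float) -> int:
    """Average number of installs required to observe `target_events`
    occurrences of an event that a fraction `event_rate` of users trigger."""
    if not 0 < event_rate <= 1:
        raise ValueError("event_rate must be in (0, 1]")
    # Round first so floating-point noise cannot push ceil() up by one.
    return math.ceil(round(target_events / event_rate, 6))

# Hypothetical goal: 100 signal events before judging a campaign.
purchase_only = installs_needed(100, 0.10)  # event fired by 10% of users
early_event = installs_needed(100, 0.50)    # assumed early event fired by 50%

print(purchase_only, early_event)
```

Under these assumptions, a purchase-only signal needs ten installs for every event, while the more common early event needs two, which is why event frequency matters as much as event value.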

For this reason, leaders in the space suggest using early user events to help predict user value, then feeding these events back to the MMP. Ideally, these events happen sooner and more often than an IAP event. The concept is straightforward: find user events that signal future value and use them to optimize your campaigns. But how do you choose the right in-app events, and how do you assign a value to them?

Enter your analytics data.

Only by combining analytics and marketing data will you understand which events are valuable. In previous articles, we dove deeply into strategies for choosing an in-app event as a signal, but a key takeaway is:

Of course, the ultimate event is an in-app-purchase conversion, however since most apps don’t see higher than a single-digit conversion during the first day, the signal of a “valuable user” will be so low, it’ll likely prove ineffective. Therefore the most effective event (1) will be early in the app, (2) fired by a comparatively large number of users, and (3) will signal a high-value user.

The key to success is finding events that meet these criteria. As a first example, we can look at a subscription trial. Crudely, you could use back-of-the-envelope math: 15% of trials turn into purchases, therefore the trial opt-in is worth 15% of the IAP value. A more advanced, but more effective, method is to track events that occur earlier and more often. For example, you might segment users who’ve played an above-average number of puzzles on day 1 and find that they’re worth double, or segment by a user’s time in the app on day 1. Both are possible indicators of future value.
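Both approaches can be sketched in a few lines of Python. Everything below is illustrative: the $40 purchase value, the field names, and the five sample users are made-up assumptions chosen so the arithmetic is easy to follow, not real customer data.

```python
from statistics import mean

def event_value(conversion_rate: float, purchase_value: float) -> float:
    """Back-of-the-envelope value of an early event: the share of users who
    later purchase, multiplied by the value of that purchase."""
    return conversion_rate * purchase_value

# 15% of trials convert; the $40 purchase value is an assumed figure.
trial_value = event_value(0.15, 40.0)

# Hypothetical joined rows: day-1 behavior from analytics plus observed LTV.
users = [
    {"user_id": "a", "day1_puzzles": 1, "ltv": 1.0},
    {"user_id": "b", "day1_puzzles": 2, "ltv": 1.0},
    {"user_id": "c", "day1_puzzles": 3, "ltv": 1.0},
    {"user_id": "d", "day1_puzzles": 10, "ltv": 2.0},
    {"user_id": "e", "day1_puzzles": 12, "ltv": 2.0},
]

# Split users at the average day-1 puzzle count and compare average LTV.
average_puzzles = mean(u["day1_puzzles"] for u in users)
heavy = [u["ltv"] for u in users if u["day1_puzzles"] > average_puzzles]
light = [u["ltv"] for u in users if u["day1_puzzles"] <= average_puzzles]
multiplier = mean(heavy) / mean(light)

print(round(trial_value, 2), multiplier)
```

In practice the segment comparison would run over many more users and a longer LTV window, but the shape of the calculation stays the same: a behavioral field from analytics, a value field, and a split.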

Only by combining the dataset that captures user behavior (the analytics) with your marketing data can you use these early in-app events for campaign optimization.

App Personalization

Experts generally agree that the ability to measure the success or failure of individual users from campaigns will diminish. Growing an app will therefore hinge less on the marketer’s ability to target high-value users at acquisition and more on personalizing the app to provide better (more effective) in-app experiences for users.

It is incumbent on app developers to find ways to parse apart the broader, more heterogeneous accumulations of users that are presented to them as cohorts by ad platforms into meaningfully-defined groups. These groups can then be exposed to appropriately differentiated in-app product treatments, designed to optimize the user experience at the smaller group level.

The abovementioned article references a case study that increased game revenue by 10% by using in-game signals as inputs to a personalization engine. AdLibertas customers have seen similar success in simple AB tests, like a puzzle app that changed puzzle complexity and increased LTVs by 10%.

AB testing isn’t a new paradigm for app developers, and in-app personalization can be viewed as an extension of it. Instead of testing app functionality on a random sample of users, app developers will create a customized experience for a defined group of users. As a high-level example, users who show indicators of being high-value might not be shown ads, to reduce the risk of cannibalization and increase the chance of purchase conversions. In a more advanced situation, apps may personalize onboarding depending on the acquisition channel (e.g., TikTok users might see a different sign-up or login method than users acquired via Instagram). In one recent example, an AdLibertas client gated an incentivized in-app action for high-converting users, resulting in higher reward video revenue and higher user engagement while still maintaining the lion’s share of conversions. Only by combining the MMP campaign data with your analytics data will you be able to measure and take action on app personalization by campaign or source.

Find fraud & understand campaign problems

Problems with campaign data are, unfortunately, never far from a marketer’s mind. Bogus installs, bots, and discrepancies with SANs (self-attributing networks) make measuring the true impact of an ad campaign difficult.

Fraudsters, click farms, and other sources of bogus installs are constantly incentivized to increase the complexity of their attacks and stay a step ahead, making finding them one long game of whack-a-mole. However, by merging a user’s in-app activity with the campaign data, you get more signals for identifying invalid traffic early. An AdLibertas customer was recently chasing the cause of poor ROAS from a campaign source. After investigation, they found that one source of traffic was generating installs but showing zero user activity after the install event. Obviously something fishy was going on. Tying the user activity to the campaign source allowed them to quickly identify and remove these sources, saving them thousands in ineffective marketing spend.
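A check like the one in this example can be sketched as a simple join between install records and analytics events. This is a minimal illustration, not the customer’s actual query: the field names (`user_id`, `source`, `event`), the network names, and the sample rows are all hypothetical.

```python
from collections import defaultdict

# Hypothetical MMP export: one row per attributed install.
installs = [
    {"user_id": "u1", "source": "network_a"},
    {"user_id": "u2", "source": "network_a"},
    {"user_id": "u3", "source": "network_b"},
    {"user_id": "u4", "source": "network_b"},
    {"user_id": "u5", "source": "network_b"},
]

# Hypothetical analytics export: post-install events only.
events = [
    {"user_id": "u1", "event": "level_complete"},
    {"user_id": "u2", "event": "session_start"},
]

def inactive_share_by_source(installs, events):
    """Fraction of each source's installs with no post-install activity."""
    active_users = {e["user_id"] for e in events}
    totals = defaultdict(int)
    inactive = defaultdict(int)
    for row in installs:
        totals[row["source"]] += 1
        if row["user_id"] not in active_users:
            inactive[row["source"]] += 1
    return {source: inactive[source] / totals[source] for source in totals}

inactive_share = inactive_share_by_source(installs, events)
print(inactive_share)
```

A source whose share sits near 1.0 while others sit near 0.0 is a candidate for closer review. What counts as a suspicious threshold depends on the app and is left as a judgment call.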

Not all campaign issues are caused by malicious intent. In a recent example, a client was buying a very cheap source of traffic and, after investigation, found that its users were all reporting multiple countries. Only after looking at user activity across multiple sessions did they realize the source was a VPN app: users who appeared to be expensive US users were installing through the VPN, only to “revert” to their country of origin on subsequent app use. Without a source of truth that ties activity to campaign-level data, this type of investigation is very difficult.
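The same joined view makes the multi-country pattern easy to surface. The sketch below assumes each analytics session carries a `country` field and that installs map a user to a source. Both structures, and the sample data, are hypothetical.

```python
from collections import defaultdict

# Hypothetical MMP data: user id -> attributed source.
install_source = {
    "u1": "network_a",
    "u2": "network_a",
    "u3": "vpn_app",
    "u4": "vpn_app",
}

# Hypothetical analytics sessions with the country seen on each session.
sessions = [
    {"user_id": "u1", "country": "US"},
    {"user_id": "u1", "country": "US"},
    {"user_id": "u2", "country": "CA"},
    {"user_id": "u3", "country": "US"},
    {"user_id": "u3", "country": "IN"},
    {"user_id": "u4", "country": "US"},
    {"user_id": "u4", "country": "BR"},
]

def multi_country_share(install_source, sessions):
    """Fraction of each source's users seen in more than one country."""
    countries = defaultdict(set)
    for session in sessions:
        countries[session["user_id"]].add(session["country"])
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for user_id, source in install_source.items():
        totals[source] += 1
        if len(countries[user_id]) > 1:
            flagged[source] += 1
    return {source: flagged[source] / totals[source] for source in totals}

country_share = multi_country_share(install_source, sessions)
print(country_share)
```

Some country switching is normal (people travel), so a high share for one source is a prompt to investigate rather than proof of a problem.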

While problems, questions, and discrepancies will continue to plague marketers, combining user analytics with your marketing data will allow you to see the errant or problematic behavior of users after the install, helping you track down and rectify the issue.

How do you combine these datasets?

Method 1: DIY – Data import

Some major analytics platforms (Amplitude, Mixpanel) can ingest external data, whether from other analytics platforms, in-app purchases, or, most relevantly, MMPs. This allows you to pull in user cohorts and campaigns to track against user actions. The reverse is rarer: few MMPs can pull in externally generated events.

Pros:

– Simple integration. Limited technology investment needed.

Cons:

– Your vendor may not support your given platform; most notably, Firebase doesn’t connect directly and will need customization.

– Critically, even if you can integrate the data, the import may limit which data you can ingest and how you can make it actionable, for example when tracking a user’s in-app ad revenue.

– Costs/data caps could make this approach untenable

Method 2: DIY – Raw Data Export & ELT

For the app developer or publisher looking to build out their own data architecture, building custom data pipelines to export the raw data, then combining and storing it, gives maximum flexibility. Organizations employ a number of methods depending on their level of sophistication.

Pros:

– You maintain control of the data

– You can make as little, or as much, investment as your organization desires.

Cons:

– It’s complex. You’ll be building and maintaining control of the data infrastructure. You’ll need the in-house expertise to design, build and maintain the big data architecture.

– Costs can swell: both storage and access get expensive as the size of your collected data increases.
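To give a feel for the "combining" step in Method 2, here is a minimal sketch that joins raw attribution rows with raw revenue events to compute early ROAS per campaign. It is a toy in-memory version under stated assumptions: real pipelines would run this in a warehouse, and the field names, campaign names, spend figures, and rows are hypothetical.

```python
from collections import defaultdict

# Hypothetical raw MMP export: which campaign each user came from.
attribution = [
    {"user_id": "u1", "campaign": "c1"},
    {"user_id": "u2", "campaign": "c1"},
    {"user_id": "u3", "campaign": "c2"},
]

# Hypothetical raw analytics export: revenue events (IAP or ad revenue).
revenue_events = [
    {"user_id": "u1", "revenue": 4.0},
    {"user_id": "u2", "revenue": 1.0},
    {"user_id": "u3", "revenue": 2.5},
    {"user_id": "u9", "revenue": 3.0},  # organic user, no attribution row
]

# Hypothetical spend per campaign over the same period.
spend = {"c1": 10.0, "c2": 10.0}

def roas_by_campaign(attribution, revenue_events, spend):
    """Revenue from attributed users divided by spend, per campaign."""
    campaign_of = {row["user_id"]: row["campaign"] for row in attribution}
    revenue = defaultdict(float)
    for event in revenue_events:
        campaign = campaign_of.get(event["user_id"])
        if campaign is not None:  # skip unattributed (organic) users
            revenue[campaign] += event["revenue"]
    return {c: revenue[c] / cost for c, cost in spend.items() if cost > 0}

roas = roas_by_campaign(attribution, revenue_events, spend)
print(roas)
```

The join key is the hard part in practice: the MMP and the analytics platform need a shared user identifier, and agreeing on one is usually the first design decision in a DIY pipeline.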

Method 3: Use a third party

AdLibertas uses APIs to pull and unify all data into a single location: an Amazon account you control. We then give you access to easy-to-use analytics to explore the data and create reports and, for the hardcore analysts, we offer direct SQL access as well.

Pros:

– It’s faster. Since we’ve pre-built all the data pipelines, we can often spin up a customer in a day.

– It’s more cost-effective: there’s no development cost, and since you’re sharing data processing with others, we get economies of scale that mitigate our processing cost, allowing us to pass savings down to you.

– There’s no technical investment: no developers are needed to get it running, no SQL is needed to access the data, so the entire team can use it.

Cons:

– You’re not cutting out technologies: since we combine, not generate, data, you’ll still need to pay for your MMP & analytics platform.

– You’ll still need to act: data is a tool. It’s worthless just sitting around, but in the right hands it’s transformational. As with all of these solutions, you’ll need to act on your findings.