The AdLibertas Approach to Big Data


Allen Eubank, CTO, AdLibertas

The AdLibertas Platform uses Trino to give customers unparalleled insight into their mobile app user-level analytics without managing their own data lake or data engineering team. We provide access to big data analytics without the headache of operating a big data cluster or writing SQL queries.

Our customers have tens of millions of users, each generating actions, ad impressions, and revenue events that add up to terabytes of data every day. Big data is hard. It requires a dedicated engineering team, which means a large upfront investment. That team is then tasked with designing and building data pipelines to satisfy business requirements and goals. As soon as the requirements change, new pipelines and infrastructure have to be (re)built to accommodate them, adding more cost.

The infrastructure and architecture behind the AdLibertas platform let us give mobile app developers access to big data without the upfront cost of building a data team.

How big is big data?

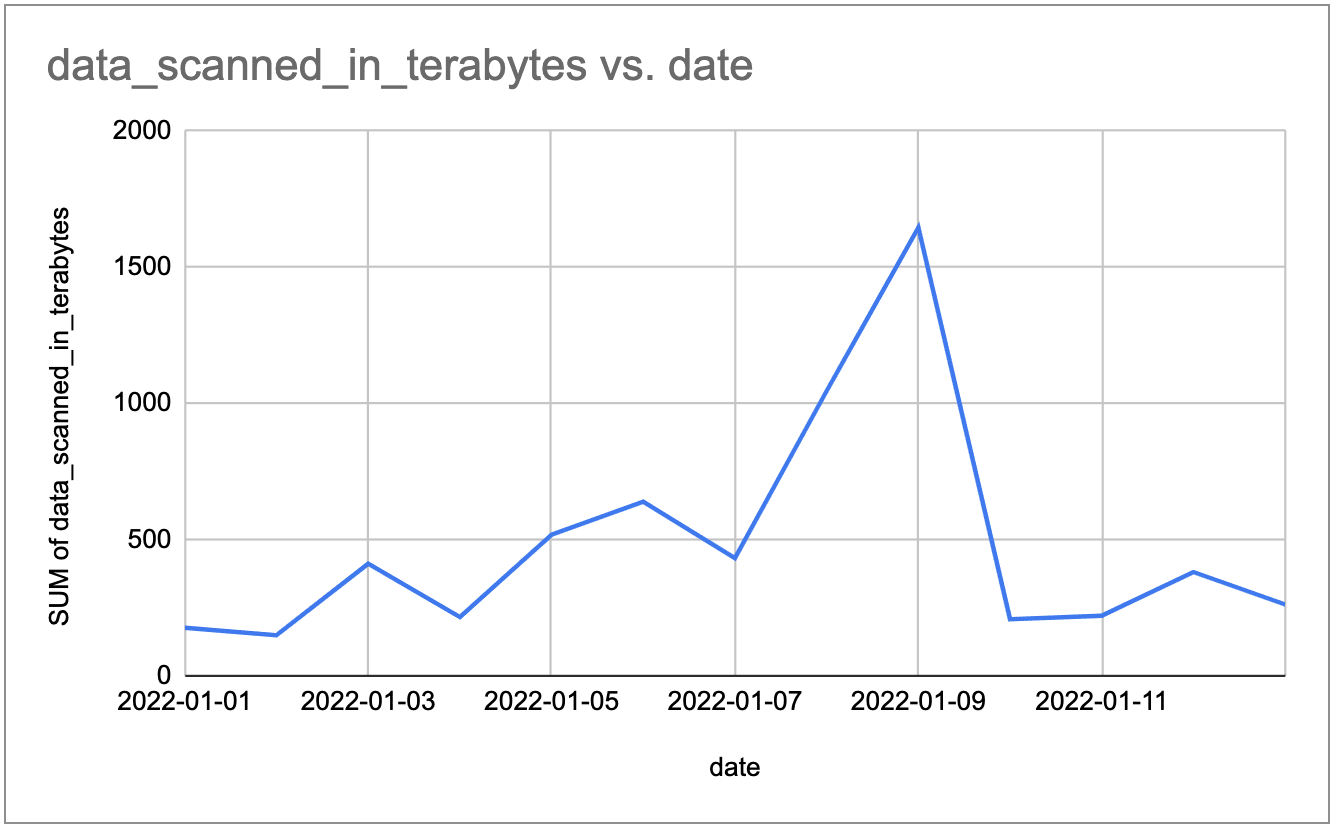

To understand the scale we are building for, it's easiest to start with where we are today. A typical day of data processing needs to ingest and process hundreds to thousands of terabytes to provide deep user-level analytics.

Data Scanned in Terabytes / Day

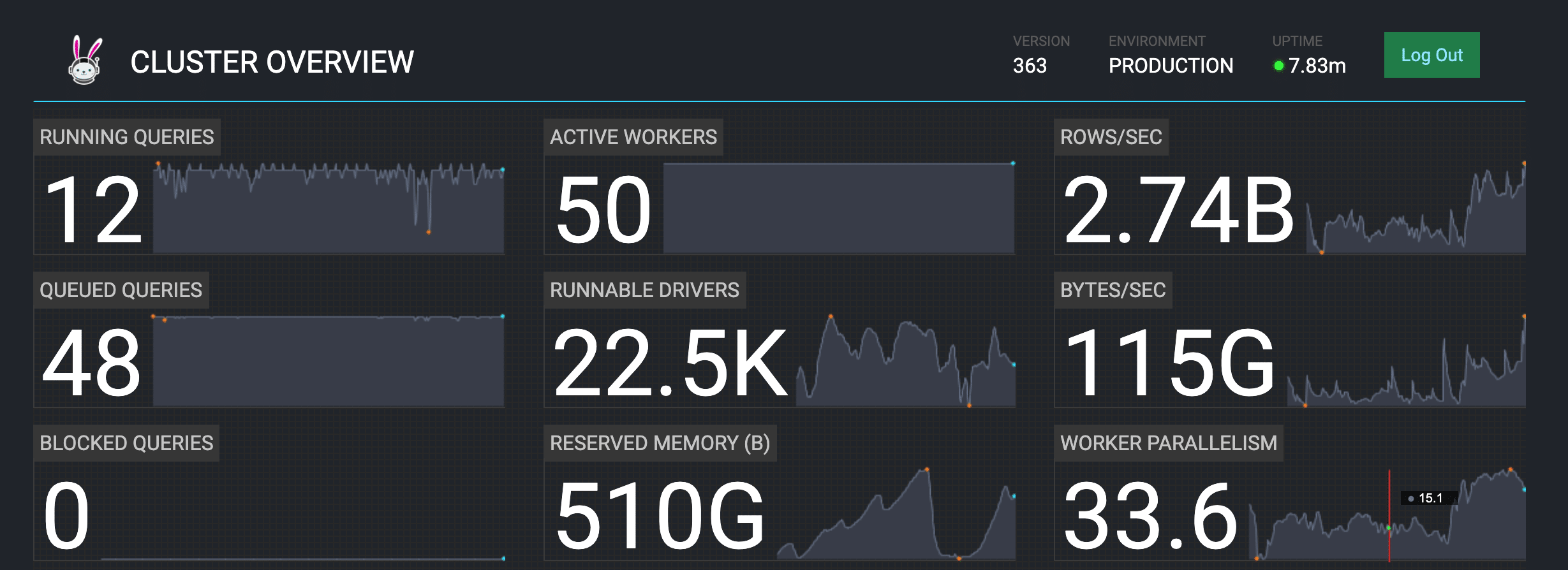

Here’s a snapshot of our Trino cluster during one of our peak times:

Live metrics from our Trino coordinator

As you can see, our cluster needs to scale up to handle 2.74 billion rows/second and transfer 115 gigabytes/second. Some queries are so massive that peak reserved memory tops 4.5 terabytes across the whole cluster, and cumulative memory reaches 2.5 petabytes across the query stages.

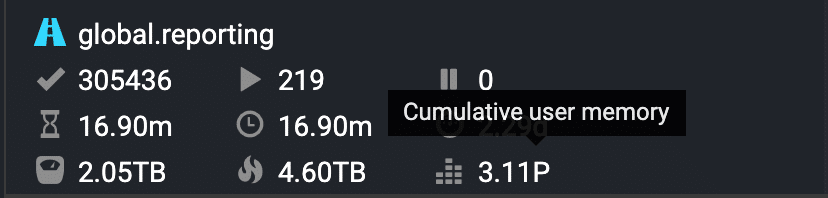

Here are example stats from one of our user-level reporting queries.

P is for Petabyte

We strive for data democratization

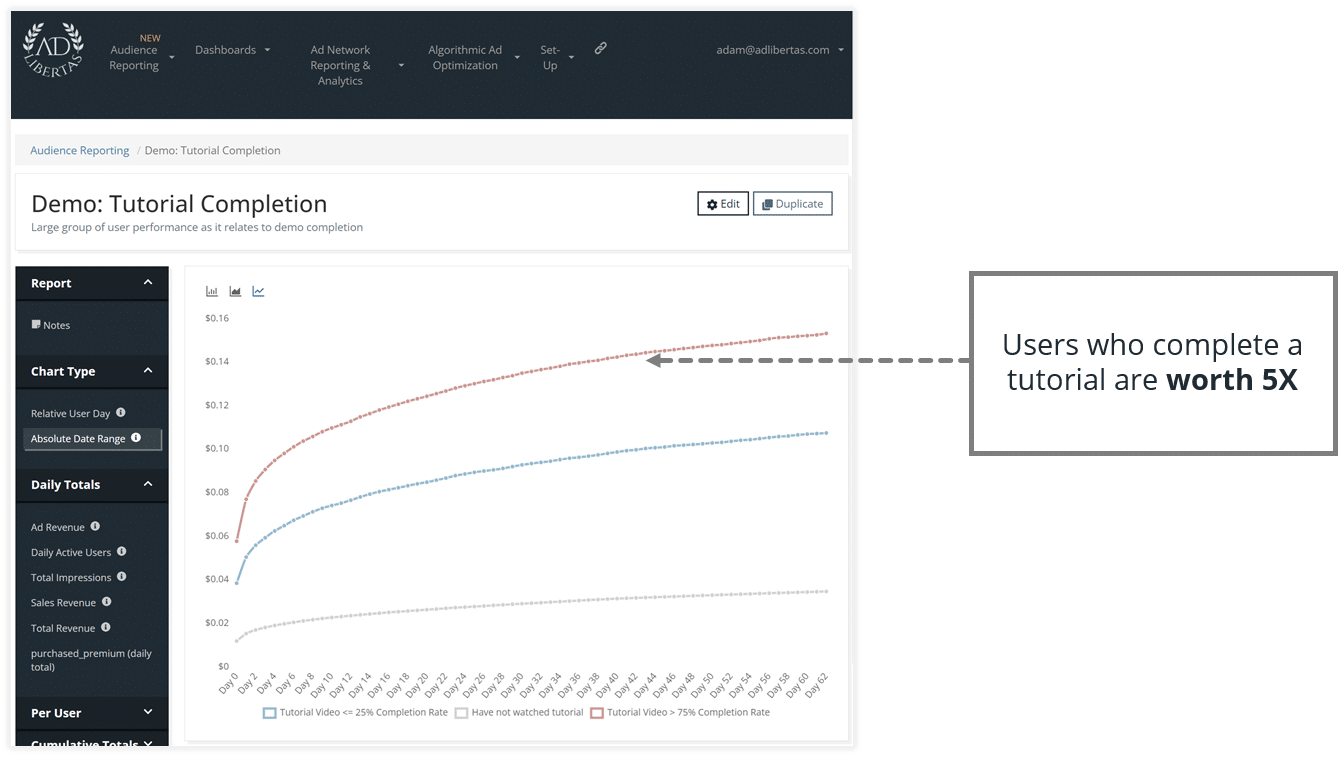

At AdLibertas, we aim to provide more than just a “data warehouse/lake/cloud.” We want to give business users the ability to think in new ways about how to measure success in their app. Take, for example, a question a customer asked us: “How valuable is a user who completes a tutorial in my app?”

To answer this, typical data cloud services would require the upfront cost of hiring and managing a data team, which comes with its own headaches. That team would then be tasked with building and operating various data pipelines, which can take months or years.

Most organizations have hypotheses about how to improve their app. Each hypothesis typically requires a data engineer (maybe a few) to build the data pipeline that tests it. Each of these pipelines is essentially a new project, with its own unique headaches and challenges.

Our goal is to enable the business user to answer these questions themselves, without needing a dedicated team.

We aim to enable everyone in the organization to get accessible, actionable insights into user behavior and performance.

AdLibertas Big Data (User-level) Architecture

Our architecture has needed to change and grow as we learned more about what data our customers care about. It started with simple reporting (“what is happening?”) and has grown into measuring behavioral performance (“what is the predicted dollar impact of a customer action?”). To accomplish this, we needed data to be collected and unified at the user level.

As you can imagine, user behavioral performance is not easy to measure. First, you need a reliable method of collecting each data point (events such as install, purchase, engagement, impression, etc.). Next, you need a method of moving and transforming that data to fit your requirements and goals. Lastly, you need to make the data accessible and ensure it works day in and day out at ever-increasing scale.
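To make the “collect, transform, make accessible” flow concrete, here is a minimal Python sketch that folds a raw event stream into one record per user and then answers the tutorial question from earlier. The `Event` shape, the event names (such as `tutorial_complete`), and the revenue figures are all hypothetical. At the volumes described here this aggregation would run as SQL in a distributed engine such as Trino rather than in a Python loop; the sketch only shows the shape of the logic.

```python
from collections import defaultdict
from dataclasses import dataclass


# Hypothetical raw event record; real event schemas will differ.
@dataclass(frozen=True)
class Event:
    user_id: str
    name: str              # e.g. "install", "tutorial_complete", "purchase"
    revenue_usd: float = 0.0


def unify_by_user(events):
    """Transform step: fold a raw event stream into one record per user."""
    users = defaultdict(lambda: {"events": set(), "revenue_usd": 0.0})
    for e in events:
        record = users[e.user_id]
        record["events"].add(e.name)
        record["revenue_usd"] += e.revenue_usd
    return dict(users)


def average_value(users, completed_event):
    """Serve step: mean revenue per user, split by whether they fired completed_event."""
    buckets = {True: [], False: []}
    for record in users.values():
        buckets[completed_event in record["events"]].append(record["revenue_usd"])
    return {
        did_complete: (sum(vals) / len(vals) if vals else 0.0)
        for did_complete, vals in buckets.items()
    }


# Collect step, faked here with a tiny in-memory stream of hypothetical events.
events = [
    Event("u1", "install"),
    Event("u1", "tutorial_complete"),
    Event("u1", "purchase", 4.99),
    Event("u1", "impression", 0.02),
    Event("u2", "install"),
    Event("u2", "impression", 0.01),
]
users = unify_by_user(events)
print(average_value(users, "tutorial_complete"))
```

Splitting users on a single event is the simplest possible cohort: it shows a correlation between completing the tutorial and revenue, not the predicted dollar impact of the action itself.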

A Trial with AWS Athena

Initially, we started with AWS Athena, which was a light introduction to thinking about big data. However, Athena ended up not being cost-effective for our user-level analytics. Athena charges based on the data scanned ($5/TB), which means a medium-sized daily workload adds up to nearly a million dollars a year (500 TB * $5 * 365 days = $912,500)!
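The arithmetic behind that estimate is easy to script. This sketch uses only the $5/TB price quoted above and assumes a constant daily scan volume; it ignores Athena's per-query minimums and any negotiated pricing, so treat the numbers as a rough estimate rather than a bill.

```python
ATHENA_USD_PER_TB = 5.0  # on-demand price per TB scanned, as quoted in the text


def annual_scan_cost(tb_scanned_per_day, usd_per_tb=ATHENA_USD_PER_TB, days=365):
    """Yearly cost of a pay-per-scan engine for a constant daily scan volume."""
    return tb_scanned_per_day * usd_per_tb * days


# Compare a few daily volumes, including the 500 TB/day case from the text.
for daily_tb in (100, 500, 1000):
    print(f"{daily_tb:>5} TB/day -> ${annual_scan_cost(daily_tb):,.0f}/year")
```

At 1,000 TB/day, the low end of the “thousands of terabytes” range mentioned earlier, the same formula gives $1,825,000 a year, which is where pay-per-scan pricing stops being viable for user-level analytics.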

AWS Athena allowed us to move quickly and decide on an architecture that would scale to meet our customers' demands. But we quickly hit the ceiling on feasibility. That said, we still use it today for some of our smaller ETL jobs.

Trino on K8S

Fast forward to today: we now provision our Trino clusters using Kubernetes on AWS Elastic Kubernetes Service (EKS). The relative ease with which we can start and stop our massive cluster (each worker has 64 vCPUs, 512 GB of RAM, and 2 x 1.9 TB SSDs attached) is a testament to AWS being one of the top-tier cloud providers. We regularly max out spot capacity in an availability zone, but because of how our Kubernetes configuration is set up, it is easy to deploy to new availability zones.
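The exact Kubernetes configuration isn't described here, so the following is only a hypothetical sketch of one common way to get that zone flexibility: a Python function that emits a Deployment manifest for Trino workers pinned to spot nodes and spread across availability zones. The `eks.amazonaws.com/capacityType` node label is set by EKS managed node groups, and `topology.kubernetes.io/zone` is the well-known Kubernetes zone label; the image tag, replica count, and resource sizes are assumptions. A real deployment also needs a coordinator and Trino's own configuration files (for example via the official Trino Helm chart), which are omitted.

```python
import json


def trino_worker_deployment(replicas, image="trinodb/trino:latest"):
    """Build a hypothetical Deployment manifest for Trino workers on EKS spot nodes."""
    labels = {"app": "trino", "component": "worker"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "trino-worker", "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Label applied by EKS managed node groups to spot-backed nodes.
                    "nodeSelector": {"eks.amazonaws.com/capacityType": "SPOT"},
                    # Prefer an even spread across zones; ScheduleAnyway lets pods
                    # still land in whichever zones currently have capacity.
                    "topologySpreadConstraints": [{
                        "maxSkew": 1,
                        "topologyKey": "topology.kubernetes.io/zone",
                        "whenUnsatisfiable": "ScheduleAnyway",
                        "labelSelector": {"matchLabels": labels},
                    }],
                    "containers": [{
                        "name": "trino-worker",
                        "image": image,
                        "resources": {
                            # Assumed sizes: leave headroom below a 64 vCPU / 512 GB
                            # node for the kubelet and system daemons.
                            "requests": {"cpu": "60", "memory": "480Gi"},
                            "limits": {"memory": "480Gi"},
                        },
                    }],
                },
            },
        },
    }


print(json.dumps(trino_worker_deployment(replicas=10), indent=2))
```

`kubectl apply -f` accepts JSON as well as YAML, so the printed manifest can be saved to a file and applied directly. Making the zone spread a preference rather than a hard rule is the design choice that matters here: when one zone runs dry on spot capacity, workers can keep scheduling into the others.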

Trino + AdLibertas

In the future, I hope to shed more light on how we set up and run an effective Trino cluster, but suffice it to say, if you are going to include big data in your business, Trino is a great choice. It's cost-effective (provided you know what you're doing), flexible in what it can do with data, and free and open-source (FOSS should be reason enough 😊). Trino has become such a pivotal part of our business that I've become a contributor to the repository!