Latency | Causes, Effects and Recommendations for Mobile Apps

Introduction

Latency has been an issue since the earliest days of online advertising. Because of the distributed nature of a phone’s network connection, latency is an even bigger issue on mobile than in traditional online advertising. This article outlines the main causes and effects of latency, and the best methods for dealing with it in your mobile advertising strategy.

What is Latency?

According to the IAB, latency is defined as “the delay between request and display of content and an ad.” This delay is the cumulative result of three steps:

- The ad request – this is the initial request to the network, mediator or demand source for an ad.

- The ad network processing time – the time the network takes to respond with a buying decision.

- The delivery or relay of the ad – either the delivery of the advertisement or the network’s decision to pass on the request (often called a no-bid).

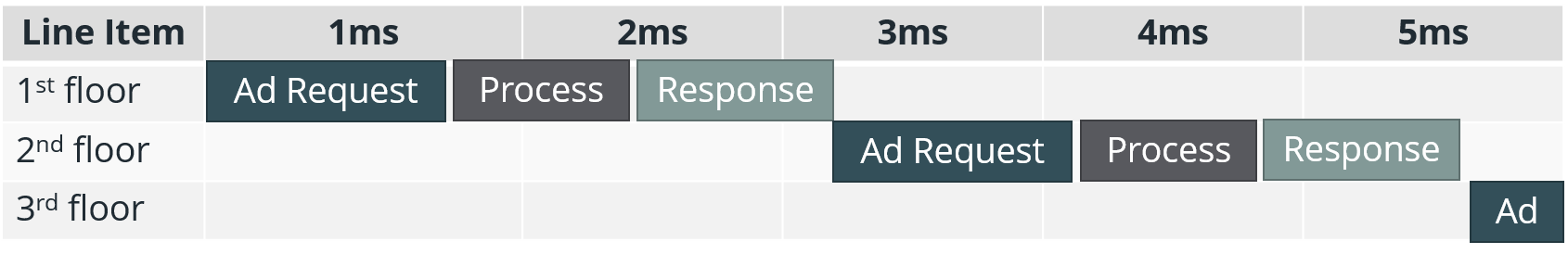

In a traditional ad waterfall, an ad request cascades down through different price points (line items), and each line item requires its own query and response for each ad request. Each line item’s request takes time to process before ultimately returning a response, usually either an ad or a no-bid. Processing the request often involves jumping between multiple networks, each of which is another opportunity to add latency.

Additional reading: why different price points are important to your monetization strategy.

In the example above, the first call incurs request, processing and response time. Each subsequent price point adds to the overall latency.
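To make the arithmetic concrete, here is a minimal Python sketch of a waterfall, assuming each line item costs one full round trip (request, processing and response) and that the next line item is called only after the previous one passes. The `LineItem` fields, network names, prices and millisecond figures are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LineItem:
    name: str
    price_cpm: float      # price point (hypothetical, USD per 1,000 impressions)
    round_trip_ms: float  # request + processing + response time for this call
    fills: bool           # True if this line item returns an ad for this request


def run_waterfall(line_items):
    """Call line items one at a time, highest price first, until one fills.

    Returns (winning line item name or None, total elapsed milliseconds).
    """
    elapsed_ms = 0.0
    for item in sorted(line_items, key=lambda li: li.price_cpm, reverse=True):
        elapsed_ms += item.round_trip_ms  # every call adds its full round trip
        if item.fills:
            return item.name, elapsed_ms
    return None, elapsed_ms  # every line item passed (no-bid)


waterfall = [
    LineItem("network_a_$10", 10.0, 150, fills=False),
    LineItem("network_b_$5", 5.0, 200, fills=False),
    LineItem("network_a_$2", 2.0, 150, fills=True),
]
print(run_waterfall(waterfall))  # ('network_a_$2', 500.0)
```

The request only fills at the third price point, so the user waits for three sequential round trips before an ad can be delivered.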

More modern bidding approaches go by different names (header bidding, RTB, pre-bid, marketplaces), but they rely on the same concept: a single, centralized ad request is exposed to simultaneous bidding, and the winner (the highest bidder) delivers the ad. This means the ad request goes through fewer relays and hand-offs, reducing the overall time to delivery.
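The bidding idea can be sketched with Python’s `asyncio`: one request fans out to every bidder at the same time, bids that miss a deadline are dropped, and the highest remaining bid wins. The bidders, their delays and the 100 ms deadline are hypothetical stand-ins for real demand sources; real auctions also involve floors, fees and pricing rules that this sketch omits.

```python
import asyncio
import time


async def ask_bidder(name, price_cpm, delay_s):
    """Simulated demand source: waits delay_s, then bids price_cpm (None = no-bid)."""
    await asyncio.sleep(delay_s)
    return name, price_cpm


async def run_auction(bidders, timeout_s):
    """Send the request to all bidders at once; keep only bids that beat the deadline."""
    tasks = [asyncio.create_task(ask_bidder(*b)) for b in bidders]
    done, pending = await asyncio.wait(tasks, timeout=timeout_s)
    for task in pending:
        task.cancel()  # late bidders are dropped, not waited for
    bids = [task.result() for task in done if task.result()[1] is not None]
    return max(bids, key=lambda bid: bid[1], default=None)


bidders = [
    ("network_a", 2.0, 0.01),
    ("network_b", 5.0, 0.02),
    ("network_c", None, 0.03),  # no-bid
    ("network_d", 9.0, 0.50),   # too slow: misses the deadline
]
start = time.perf_counter()
winner = asyncio.run(run_auction(bidders, timeout_s=0.1))
elapsed = time.perf_counter() - start
print(winner, f"{elapsed:.2f}s")
```

Because every bidder is asked in parallel, the auction takes at most about as long as its deadline, however many bidders join, whereas a waterfall’s latency grows with every extra step.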

In theory this approach is sound: a unified market would eliminate many of the drawbacks caused by latency. In practice, however, the market has yet to centralize, with most demand sources bidding in the same auctions. So most app developers rely on a hybrid model that combines bidding with traditional waterfalls.

Sidebar: Why doesn’t everyone bid in the same auction?

In short: you can learn a lot by watching someone’s buying patterns. The major platforms have developed extremely sophisticated data-gathering and targeting techniques. These allow them to find the right audience at the right time, reducing wasted impressions and therefore bringing down their costs. Bidding in a shared auction would expose these granular buying strategies to competitors, handing over competitive intelligence and inviting competition that drives down advertiser margins.

Why does latency matter?

Latency delays the delivery of ads, and sometimes of the app content itself. This reduces the ad’s exposure to the user: at best it lowers advertising efficacy, and therefore earnings; at worst it damages the user experience. Clearly, less latency is better.

Latency Sources

The sheer complexity of ad tech means there are many factors that can cause or exacerbate latency. While individual effects may be minimal, their impacts are usually cumulative and are therefore worth understanding in aggregate:

The Ad Request:

The ad request itself must invoke the third-party SDK, which then sends an API request to the network’s server. Mobile infrastructure can add significant delay before the request even reaches the wider internet.

Network Response Processing:

Different types of demand, ranked from fastest to slowest:

1st party: also called direct traffic, this is the fastest type of demand to respond. It usually requires only a simple decision and a lightweight response from the ad server. Examples: directly sold campaigns, backfill and cross-promotions.

2nd party: in this case the ad server consults internal serving logic (e.g. a decision tree) to decide whether to fill the ad request. This decision is usually made fairly quickly because it is most often confined to the same company’s technology stack.

3rd party (auctions): the recipient server holds an auction among third parties (DSPs) with a defined response deadline (~60 ms).

3rd party (relays): the recipient server sends out another ad request to another server, effectively starting the entire process over.

The Ad Delivery:

Bandwidth & Infrastructure: delivery time depends on the user’s connection speed and the region’s network infrastructure. For this reason, infrastructure latency is often much higher in developing countries.

Ad type: the weight and complexity of the ad type factor into the delivery time. Videos take longer to load than native or banner ads; CDNs can help but are often expensive.

Data center locations: the ad network’s location affects response time as well. If a network has only a single data center, response times for users far from it will suffer.

No-Bids, Relays & Hand-offs:

Waterfall Chains: as mentioned, the single largest contributor to latency is the chained effect of multiple calls to different networks.

Lost Relays: as we covered in our article on serving discrepancies, advertising isn’t simple. It relies on multiple distributed technologies working in tandem, and the simple fact is that they don’t always work as intended. Messages between servers get lost or misrouted: ad requests and impressions go missing, no-bids aren’t sent, and the waterfall chain breaks. The main mediator is responsible for restarting a lost ad request, but it must first wait for the request to time out, and these timeouts can take several seconds to expire.

SDKs: most networks require SDKs to serve ads because they want to control requests and delivery. This means that for each relay in the waterfall, the ad request must originate from the device and the response must be returned to it. Compared to a server, a mobile device has a slower connection and a less powerful processor, so a chain of ad requests can lead to massive delays.

JS Tags: JavaScript tags are declining in popularity on mobile, which is no surprise. Because tags rely on standards adopted largely for the web, they aren’t well suited to mobile devices. Their timeouts, content delivery mechanisms and quality standards are far poorer than those of other methods of serving in-app ads. Some platforms allow tags a staggering 10-second timeout.
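The cost of a lost relay can be sketched with Python’s `asyncio.wait_for`: the mediator cannot tell a slow response from a lost one, so a response that never arrives costs the full timeout before the next network is tried. The two simulated networks and the 200 ms timeout are hypothetical; production timeouts can run to several seconds.

```python
import asyncio
import time


async def lost_relay():
    """Hypothetical relay whose response never arrives (e.g. a dropped no-bid)."""
    await asyncio.Event().wait()  # the event is never set, so this waits forever


async def healthy_network():
    """Hypothetical network that answers with an ad after 10 ms."""
    await asyncio.sleep(0.01)
    return "ad"


async def mediate(calls, timeout_s):
    """Try each call in waterfall order; a silent call costs the full timeout."""
    for call in calls:
        try:
            return await asyncio.wait_for(call(), timeout=timeout_s)
        except asyncio.TimeoutError:
            continue  # no response: treat as a no-bid and move down the chain
    return None


start = time.perf_counter()
result = asyncio.run(mediate([lost_relay, healthy_network], timeout_s=0.2))
elapsed = time.perf_counter() - start
print(result, f"{elapsed:.2f}s")  # the ad only arrives after the 0.2 s timeout
```

Shortening the timeout recovers faster from lost relays, but risks cutting off slow networks that would have filled.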

Pre-caching is an important tool.

Full-screen ads and some video ads allow pre-caching: you request and load the ad in the background before it is shown to the user, mitigating some of the negative effects of latency. This isn’t as simple as it sounds. You’ll need to balance having the ad ready in time to display against calling it too early, which can cause it to time out and not be counted, or put undue network load on the end user.
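A minimal sketch of that balance, assuming each pre-cached ad stays valid for a fixed time-to-live after loading: ads taken too late are discarded rather than shown. The `AdPrecache` class, the `load_ad` callable and the one-hour TTL are hypothetical; each network sets its own expiry rules.

```python
import time


class AdPrecache:
    """Hypothetical pre-cache: load an ad early, but never show one older than ttl_s."""

    def __init__(self, load_ad, ttl_s, clock=time.monotonic):
        self.load_ad = load_ad  # callable that fetches a creative (assumed)
        self.ttl_s = ttl_s      # how long a loaded ad stays valid (assumed)
        self.clock = clock
        self._ad = None
        self._loaded_at = 0.0

    def preload(self):
        """Call ahead of the placement, e.g. when the level before an interstitial starts."""
        self._ad = self.load_ad()
        self._loaded_at = self.clock()

    def take(self):
        """Return the cached ad if still fresh; an expired ad is discarded, not shown."""
        ad, self._ad = self._ad, None
        if ad is None or self.clock() - self._loaded_at > self.ttl_s:
            return None
        return ad


# Usage with a fake clock so the timing is deterministic.
now = [0.0]
cache = AdPrecache(load_ad=lambda: "creative-123", ttl_s=3600, clock=lambda: now[0])
cache.preload()
now[0] = 30.0
print(cache.take())  # creative-123 (shown 30 s after loading)
cache.preload()      # loaded at t = 30 s
now[0] = 30.0 + 4000
print(cache.take())  # None (expired before the user reached the placement)
```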

Sidebar: Why aren’t all ads pre-cached?

In a word: complexity and expense.

Banners in particular are a very high-volume business, and pre-caching adds a significant burden on the advertiser. Tracking a request-and-show ad is relatively straightforward (fire a notification when the ad is shown), but pre-caching means the ad network must wait and listen for the eventual “view” notification. This can take a long time and adds a significant burden to the server’s internal caching and memory needs.

Directly measuring latency

Latency can be very difficult to measure directly. Because it is caused by a combination of multiple technologies, it isn’t a metric that networks or mediators readily report. Even at the single-impression level, comparing the timestamps of an “ad request” event and the “ad shown” impression event can reveal a latency issue, but it won’t identify the actual source, as mediators don’t disclose the inner workings of the request auction.
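Pairing those two events is straightforward once both are logged with a shared request ID. A minimal sketch, assuming a hypothetical event log with `request_id`, `type` and `ts_ms` fields; the event names and timestamps are made up for illustration.

```python
import math
from statistics import median


def request_to_show_latencies(events):
    """Pair each "ad_shown" event with its "ad_request" by request_id; return ms deltas."""
    requested = {e["request_id"]: e["ts_ms"] for e in events if e["type"] == "ad_request"}
    return {
        e["request_id"]: e["ts_ms"] - requested[e["request_id"]]
        for e in events
        if e["type"] == "ad_shown" and e["request_id"] in requested
    }


def percentile(values, pct):
    """Nearest-rank percentile (0 < pct <= 100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


events = [
    {"request_id": "r1", "type": "ad_request", "ts_ms": 1_000},
    {"request_id": "r1", "type": "ad_shown", "ts_ms": 1_450},
    {"request_id": "r2", "type": "ad_request", "ts_ms": 2_000},
    {"request_id": "r2", "type": "ad_shown", "ts_ms": 2_300},
    {"request_id": "r3", "type": "ad_request", "ts_ms": 3_000},  # never shown
    {"request_id": "r4", "type": "ad_request", "ts_ms": 4_000},
    {"request_id": "r4", "type": "ad_shown", "ts_ms": 6_500},
]
latencies = request_to_show_latencies(events)
print(latencies)                           # {'r1': 450, 'r2': 300, 'r4': 2500}
print(median(latencies.values()))          # 450
print(percentile(latencies.values(), 95))  # 2500
```

The 2.5-second outlier shows that a problem exists, but nothing in these two events says which network or line item caused it.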

Moreover, because latency is often the result of distributed network behavior, it’s difficult to replicate production latencies in a development environment. Identifying a single network or line item with a latency problem often requires a tedious process of elimination: turning each line item off and on and measuring any change in latency. This can be frustrating and time-consuming, but it is necessary if latency is an ongoing concern.
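The process of elimination can be sketched as a loop that disables one line item at a time and records how much the measured latency drops. The `measure_latency_ms` hook is hypothetical: in production each call would mean running real traffic with that configuration for a while. The simulated measurement below simply adds up a fixed cost per line item, which is a simplification, since removing a line item in a real waterfall also changes which later line items get called.

```python
def latency_contributions(line_items, measure_latency_ms):
    """Disable one line item at a time and record how much measured latency drops.

    measure_latency_ms(enabled_items) is a hypothetical hook returning latency in ms.
    """
    baseline = measure_latency_ms(line_items)
    drops = {}
    for item in line_items:
        enabled = [li for li in line_items if li != item]
        drops[item] = baseline - measure_latency_ms(enabled)
    # Largest drop first: the best candidates to investigate or remove.
    return sorted(drops.items(), key=lambda kv: kv[1], reverse=True)


# Simulated measurements: pretend each line item adds a fixed amount of latency.
simulated_cost_ms = {"network_a_$10": 120, "network_b_$5": 900, "network_c_$2": 80}


def fake_measure(enabled):
    return sum(simulated_cost_ms[li] for li in enabled)


print(latency_contributions(list(simulated_cost_ms), fake_measure))
# [('network_b_$5', 900), ('network_a_$10', 120), ('network_c_$2', 80)]
```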

Sidebar: Ad-serving costs aren’t negligible:

Remember that an advertiser is trying to make a profit. If your app generates many no-bids, runs many auctions, or requests ads and then doesn’t show them, the demand source will factor these delivery costs into the prices it pays for your ad requests, and your ad rates will suffer.

How to mitigate latency.

Latency is a necessary by-product of competitive pricing. App developers must strike a balance between granularity and efficiency to maximize revenue contribution. Running lots of line items from multiple networks might yield a granular waterfall with maximum pricing competitiveness, but if most of those line items yield only a few pennies a day, they may not be worth the trouble and may ultimately lose revenue through increased latency. So while it’s impossible to remove latency completely, it is possible to manage its impact through know-how, measurement and experimentation.

Experimentation

Ideally, you can allocate traffic to two different strategies and experiment to find the best balance between pricing and delivery efficiency. What we do: start with two different waterfalls, split traffic evenly between them and compare the net total revenue per ad request.
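A minimal sketch of such an experiment: a deterministic 50/50 split, plus revenue per ad request computed from each variant’s totals. Hashing the user ID (rather than splitting individual requests) is a design choice assumed here so each user consistently sees one waterfall; the function names and the daily figures are hypothetical.

```python
import hashlib


def assign_waterfall(user_id: str) -> str:
    """Deterministic 50/50 split: the same user always lands in the same waterfall."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return "A" if digest[0] % 2 == 0 else "B"


def revenue_per_request(results):
    """results: iterable of (variant, ad_requests, net_revenue_usd) rows, e.g. daily totals."""
    totals = {}
    for variant, requests, revenue in results:
        req, rev = totals.get(variant, (0, 0.0))
        totals[variant] = (req + requests, rev + revenue)
    return {variant: rev / req for variant, (req, rev) in totals.items() if req}


daily_results = [
    ("A", 1_000, 2.50),
    ("B", 800, 2.40),
    ("A", 1_000, 1.50),
]
print(assign_waterfall("user-42"))
print(revenue_per_request(daily_results))  # A roughly 0.002, B roughly 0.003 USD per request
```

Comparing revenue per request, rather than raw revenue, keeps the comparison fair even if the two variants end up with slightly different traffic volumes.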

Measurement

For many app developers, A/B testing requires too much overhead and effort. Don’t despair: by observing and applying some best practices you can do almost as well as a perfect A/B test. But beware: fill and attempt rates can’t tell you everything. A line item with a low fill rate may still have a high impact on the waterfall. What we do: balance fill against “cohort revenue contribution”, or, put more simply, the projected revenue contribution of the additional ad request.
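Revenue contribution can be estimated with standard eCPM arithmetic (revenue = impressions × eCPM / 1,000), assuming each line item only sees the requests that the line items above it did not fill. The line item names, fill rates and eCPMs below are illustrative, not measured data.

```python
def waterfall_contributions(total_requests, line_items):
    """Estimate each line item's revenue contribution in a waterfall.

    line_items: (name, fill_rate, ecpm_usd) tuples, ordered from first called to last.
    Only requests that earlier line items did not fill reach the next one.
    """
    remaining = total_requests
    rows = []
    for name, fill_rate, ecpm_usd in line_items:
        impressions = remaining * fill_rate
        revenue_usd = impressions * ecpm_usd / 1000  # eCPM is revenue per 1,000 impressions
        rows.append((name, remaining, impressions, revenue_usd))
        remaining -= impressions
    return rows


line_items = [
    ("network_a_$20", 0.02, 20.0),   # rarely fills, but pays well
    ("network_b_$5", 0.30, 5.0),
    ("network_c_$0.50", 0.60, 0.5),  # fills often, pays little
]
for name, reached, impressions, revenue in waterfall_contributions(100_000, line_items):
    print(f"{name}: reached {reached:,.0f}, filled {impressions:,.0f}, revenue ${revenue:.2f}")
```

With these assumed numbers, the line item with a 2% fill rate contributes about $40, roughly twice as much as the one with a 60% fill rate, which is why fill rate alone is a poor guide to what to prune.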

Photo by Brett Jordan on Unsplash

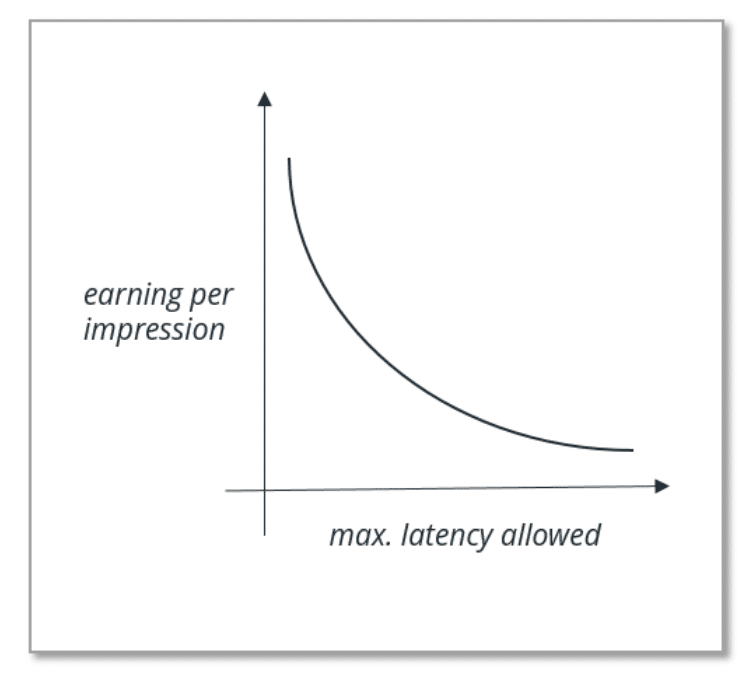

The latency paradox:

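Further complicating an already confusing topic is a concept we call the latency paradox. Simply put: the more latency a single ad request generates, the less valuable that ad request is to ad buyers, so the less its latency matters. A request that has worked its way deep into a waterfall has already been passed over by every higher-priced line item, so whatever finally fills it pays relatively little.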

Or, put another way: who cares if you add latency to an advertisement that’s paying you $0?

Conclusion:

Much like ad serving discrepancies, ad serving latency is a multi-faceted technology challenge that takes balance, know-how and patience to understand, diagnose and minimize. We hope this article gives you the guidance to understand and combat this tricky aspect of the mobile advertising ecosystem.